One of the better developments in chipmaking in recent years has to be chiplets, and stacking said chiplets on top of one another. The possibilities, as they say, are endless. AMD showed how gaming frame rates could be bolstered by stacking more cache onto a processor with the Ryzen 7000X3D CPUs at CES 2023, but it also had something equally impressive for the data centre folks.

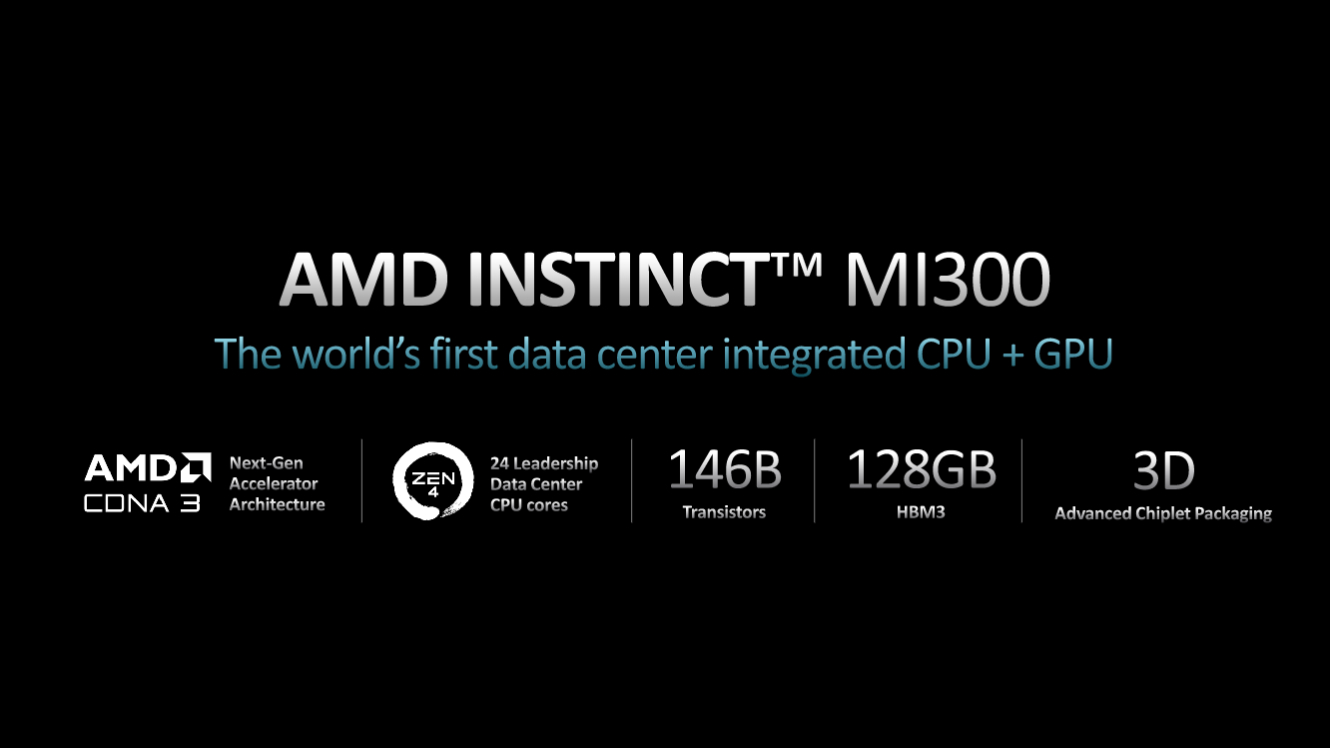

AMD is using its 3D chip-stacking tech to combine a CPU and GPU onto one absolutely mammoth chip: the AMD Instinct MI300.

It’s not just that this chip has both a CPU and GPU on it. That’s not particularly noteworthy these days, as basically everything we consider a CPU nowadays has a GPU integrated into it. AMD’s no stranger to the idea either: it has been making APUs, essentially chips with both CPU and GPU under one roof, for years. That’s actually what AMD defines the MI300 as: an APU.

But what’s cool about AMD’s latest APU is the scale of the thing. It’s huge.

This is a data centre accelerator that contains 146 billion transistors. That’s very nearly double the transistor count of Nvidia’s AD102, the GPU found inside the RTX 4090, at 76.3 billion. And it is physically big, too. In fact, AMD’s CEO Dr. Lisa Su held the MI300 up on stage at CES, and either she’s shrunk or this thing is the size of a decently sized stroopwafel. The cooling for this thing must be immense.

The chip delivers a GPU born out of AMD’s CDNA 3 architecture. That’s a version of its graphics architecture built only for compute performance: RDNA for gaming, CDNA for compute. That’s packaged alongside a Zen 4 CPU and 128GB of HBM3 memory. The MI300 comes with nine 5nm chiplets on top of four 6nm chiplets. That suggests nine CPU or GPU chiplets (it looks to be six GPU chiplets and three CPU chiplets) on top of what’s presumed to be the four-piece base die it’s all loaded onto. Then there’s memory around the edges of that. So far, no specifics have been given as to its actual makeup, but we do know it’s all connected up by a 4th Gen Infinity interconnect architecture.

The idea is that if you load everything onto one package, with fewer hoops for data to jump through to get around, you end up with a highly efficient product compared to one that’s making loads of calls to off-chip memory and slowing down the whole process. Computing at this level is all about bandwidth and efficiency, so this sort of approach makes a lot of sense. It’s the same sort of principle as AMD’s Infinity Cache on its RDNA 2 and 3 GPUs: having more data close at hand reduces the need to go further afield for it, which in that case helps keep frame rates high.
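To put rough shape on that principle, here is a minimal Python sketch of expected access latency when some fraction of requests is served from nearby memory and the rest has to travel further. The function name and the two latency figures are hypothetical placeholders chosen for illustration; they are not MI300 or Infinity Cache measurements, which AMD has not published here.

```python
def average_access_ns(near_fraction, near_ns, far_ns):
    """Expected latency when near_fraction of accesses are served nearby."""
    if not 0.0 <= near_fraction <= 1.0:
        raise ValueError("near_fraction must be between 0 and 1")
    return near_fraction * near_ns + (1.0 - near_fraction) * far_ns


# Hypothetical latencies, for illustration only (not AMD figures).
NEAR_NS = 20.0    # assumed cost of reaching on-package data
FAR_NS = 200.0    # assumed cost of reaching data further afield

for near_fraction in (0.5, 0.9):
    latency = average_access_ns(near_fraction, NEAR_NS, FAR_NS)
    print(f"{near_fraction:.0%} served nearby: about {latency:.0f} ns on average")
```

Whatever the real numbers turn out to be, the shape of the result is the same: the more often data is found close by, the lower the average cost of fetching it.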

But there are a few reasons why we don’t have an MI300-style accelerator for gaming. For one, reasonable expectations for most gamers’ budgets would suggest we can’t afford whatever AMD’s looking to charge for an MI300. It’s going to be a lot. Equally, no one’s quite cracked how to get a game to see multiple compute chips on a gaming GPU as a single entity without specific coding for it. We’ve been there with SLI and CrossFire, and it didn’t end well.

But yes, the MI300 is extremely large and extremely powerful. AMD is touting around an eight times improvement in AI performance versus its own Instinct MI250X accelerator. And if you’re wondering, the MI250X is also a multi-chiplet monster, with 58 billion transistors, but that once impressive number just seems a little small now. Basically, that wasn’t an easy chip to beat, but the MI300 does, and it’s also five times more efficient by AMD’s reckoning.
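For a sense of scale, the transistor counts quoted in this article can be compared directly. The short Python sketch below uses only those three figures; the dictionary and function names are ours, and transistor count is of course only a rough proxy for size or performance.

```python
# Transistor counts in billions, as quoted in this article.
transistors_bn = {
    "AMD Instinct MI300": 146.0,
    "Nvidia AD102 (RTX 4090)": 76.3,
    "AMD Instinct MI250X": 58.0,
}


def ratio(chip_a, chip_b):
    """How many times more transistors chip_a has than chip_b."""
    return transistors_bn[chip_a] / transistors_bn[chip_b]


mi300_vs_ad102 = ratio("AMD Instinct MI300", "Nvidia AD102 (RTX 4090)")
mi300_vs_mi250x = ratio("AMD Instinct MI300", "AMD Instinct MI250X")

print(f"MI300 vs AD102:  {mi300_vs_ad102:.2f}x")
print(f"MI300 vs MI250X: {mi300_vs_mi250x:.2f}x")
```

That works out to a little over 1.9 times the AD102 and roughly 2.5 times the MI250X.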

The MI300 is coming in the second half of the year, so it’s a ways off yet. That said, it’s more of something to marvel at than actually splash out for. Unless you work at a data centre or in AI and have access to mega bucks, that is.